Self-managed EFK Setup for 10 billion+ documents

TL;DR:

When running multiple applications and services on a Kubernetes cluster, it makes more sense to stream all of your application and Kubernetes cluster logs to one centralised logging infrastructure for easy log analysis.

Background:

A financial institution was looking for a centralised open source logging platform onto which it would onboard multiple products. The journey logs of all these products are kept accessible for 2 months. Logs older than 2 months are archived to an AWS S3 bucket and can be restored at any point in time.

What is EFK Stack?

- Elasticsearch is a distributed and scalable search engine commonly used to sift through large volumes of log data. It is a NoSQL database based on the Lucene search engine (a search library from Apache). Its primary job here is to store the logs it receives from Fluentd and to retrieve them on request.

- Fluentd is a log shipper. It is an open source log collection agent which supports multiple data sources and output formats. It can also forward logs to solutions like Stackdriver, CloudWatch, Elasticsearch, Splunk, BigQuery and many more. In short, it is a unifying layer between systems that generate log data and systems that store log data.

- Kibana is a UI tool for querying, data visualization and dashboards. It lets you explore your log data through a web interface, build visualizations for event logs, and write specific queries to filter information when detecting issues. You can build virtually any type of dashboard using Kibana. Kibana Query Language (KQL) is used for querying Elasticsearch data. Here we use Kibana to query indexed data in Elasticsearch.

- ElasticHQ is an open source application that offers a simplified interface for managing and monitoring Elasticsearch clusters.

EFK Architecture:

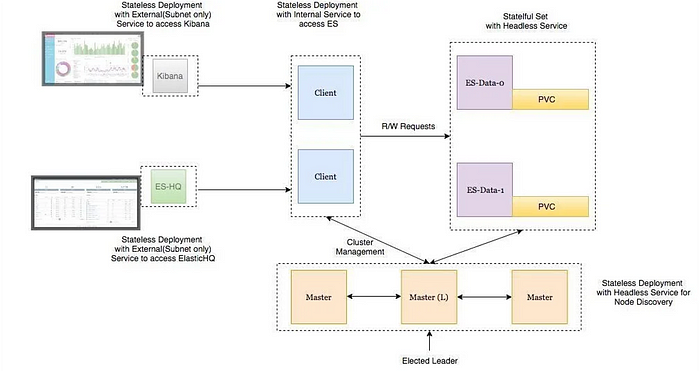

In total, 4 components are deployed as part of the EFK setup:

- Fluentd: Deployed as a DaemonSet, as it needs to collect the container logs from all the nodes. It connects to the Elasticsearch service endpoint to forward the logs.

- Elasticsearch: Deployed as a StatefulSet, as it holds the log data. We also expose the service endpoint for Fluentd and Kibana to connect to it.

- Kibana: Deployed as a Deployment; it connects to the Elasticsearch service endpoint.

- ES-HQ: Deployed as a stateless Deployment; it connects to the Elasticsearch service endpoint to monitor the cluster and fetch details of its health.

Set up the EFK stack on Kubernetes:

1. Clone the repo: https://github.com/sagyvm/efk.git

2. Go to the directory: helm-charts/elasticsearch

3. Generate the SSL certs for Elasticsearch and create the k8s secrets.

    # Password for the built-in elastic user, used for the elastic-credentials secret below
    password='<your elastic user password>'

    docker run --name elastic-helm-charts-certs -i -w /tmp \
      docker.elastic.co/elasticsearch/elstic-with-s3:7.9.2 \
      /bin/sh -c " \
        elasticsearch-certutil ca --out /tmp/elastic-stack-ca.p12 --pass '' && \
        elasticsearch-certutil cert --name security-master --dns security-master --ca /tmp/elastic-stack-ca.p12 --pass '' --ca-pass '' --out /tmp/elastic-certificates.p12" && \
    docker cp elastic-helm-charts-certs:/tmp/elastic-certificates.p12 ./ && \
    docker rm -f elastic-helm-charts-certs

    openssl pkcs12 -nodes -passin pass:'' -in elastic-certificates.p12 -out elastic-certificate.pem && \
    openssl x509 -outform der -in elastic-certificate.pem -out elastic-certificate.crt && \
    kubectl create -n development-tools secret generic elastic-certificates --from-file=elastic-certificates.p12 && \
    kubectl create -n development-tools secret generic elastic-certificate-pem --from-file=elastic-certificate.pem && \
    kubectl create -n development-tools secret generic elastic-certificate-crt --from-file=elastic-certificate.crt && \
    kubectl create -n development-tools secret generic elastic-credentials --from-literal=password="$password" --from-literal=username=elastic

4. Create s3-access-secret to store and retrieve snapshots from an AWS S3 bucket.

    kubectl create -n development-tools secret generic s3-access-secret --from-literal=s3.client.default.access_key='<your AWS access key>' --from-literal=s3.client.default.secret_key='<your AWS secret key>'

5. Create the storage class.

    kubectl -n development-tools apply -f ./storageclass.yaml

6. Now install the Helm charts to set up Elasticsearch with 3 master nodes, 5 data nodes and 3 client nodes.

    helm upgrade --install elasticsearch ./helm-charts/elasticsearch --set imageTag=7.9.2 --namespace development-tools

    helm upgrade --install data-node ./helm-charts/elasticsearch --namespace development-tools --set imageTag=7.9.2 -f helm-charts/elasticsearch/values-node.yaml

    helm upgrade --install client ./helm-charts/elasticsearch --namespace development-tools --set imageTag=7.9.2 -f helm-charts/elasticsearch/client.yaml

7. To set up Kibana, use the commands below:

    ## If SSL is enabled:
    encryptionkey=$(docker run --rm busybox:1.31.1 /bin/sh -c "< /dev/urandom tr -dc _A-Za-z0-9 | head -c50") && \
    kubectl create -n development-tools secret generic kibana --from-literal=encryptionkey="$encryptionkey"

    helm install kibana --namespace development-tools ./helm-charts/kibana --set imageTag=7.9.0

    echo -n "<encryption key of at least 32 characters here>" | base64

    kubectl edit -n development-tools secret kibana ## update encryption-key

8. To install the Fluentd DaemonSet, update the Elasticsearch load balancer URL in the values file and run the command below:

    ## Update the load balancer URL for ES HOST
    helm upgrade --install fleuentd fluentd-elasticsearch

9. To install ElasticHQ, run the command below:

    kubectl apply -f helm-charts/elasticsearch/es-hq.yaml

EFK Best practices:

Disable swapping

Swap memory can compromise Elasticsearch cluster performance during heavy I/O tasks and garbage collection.
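A quick way to see whether a node still has swap enabled is to read `/proc/swaps`. The snippet below is a minimal sketch that assumes a Linux host; the helper name `active_swap_devices` is our own and not part of any tool.

```shell
# Count active swap devices listed in a /proc/swaps-style file.
# The first line of that file is a header, so it is skipped.
active_swap_devices() {
  # $1: path to the swaps table (defaults to /proc/swaps)
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

if [ -r /proc/swaps ]; then
  if [ "$(active_swap_devices)" -gt 0 ]; then
    echo "swap is enabled; 'sudo swapoff -a' turns it off until the next reboot"
  else
    echo "swap is disabled"
  fi
fi
```

Note that `swapoff -a` does not survive a reboot; to make the change permanent, the swap entries also have to be removed from `/etc/fstab`.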

File descriptors.

It’s a good practice to increase the limit on the number of open file descriptors for the user running Elasticsearch to 65,536 or higher.

Threads.

Elasticsearch instances should be able to create at least 4096 threads for optimal performance on heavy tasks.
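The descriptor and thread limits above can be checked from a shell running as the Elasticsearch user (on the host or inside the container). The snippet below is a rough sketch that assumes Linux and reads `/proc/self/limits`; the helper names `soft_limit` and `limit_ok` are hypothetical and only used for illustration.

```shell
# Print the soft value of a named limit from a /proc/<pid>/limits-style file.
soft_limit() {
  # $1: limit name exactly as printed (e.g. "Max open files"), $2: file to read
  awk -v name="$1" 'index($0, name) == 1 { sub(name, ""); print $1; exit }' \
    "${2:-/proc/self/limits}"
}

# Succeed when a limit value (a number or "unlimited") meets the minimum.
limit_ok() {
  # $1: current value, $2: required minimum
  [ "$1" = "unlimited" ] || [ "$1" -ge "$2" ]
}

if [ -r /proc/self/limits ]; then
  # "limit name:required minimum" pairs, taken from the recommendations above
  for check in "Max open files:65536" "Max processes:4096"; do
    name=${check%%:*}
    want=${check##*:}
    have=$(soft_limit "$name")
    if limit_ok "$have" "$want"; then
      echo "$name OK: $have"
    else
      echo "$name too low: $have (want >= $want)"
    fi
  done
fi
```

On Linux the "Max processes" limit also covers threads, which is why it is the value compared against 4096 here.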

Java heap size.

Although a larger heap is generally better, it is usually not recommended to set it above 50% of the RAM, because Elasticsearch needs memory for other tasks and the host’s OS can become slow.
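As a small worked example of the 50% rule, the sketch below derives a heap size from the memory available to a node. The function name `suggest_heap_mb` is hypothetical, and the default cap of 31 GB is an assumption added here (based on the common advice to keep the heap below the JVM's compressed-pointer threshold); the text above only states the 50% rule.

```shell
# Suggest a JVM heap size in MB: half of the RAM, limited by a cap.
suggest_heap_mb() {
  # $1: total RAM in MB, $2: optional cap in MB (assumed default: 31744 = 31 GB)
  half=$(( $1 / 2 ))
  cap=${2:-31744}
  if [ "$half" -gt "$cap" ]; then
    echo "$cap"
  else
    echo "$half"
  fi
}

# Example: a data node with 16 GB of memory
heap=$(suggest_heap_mb 16384)
echo "ES_JAVA_OPTS=-Xms${heap}m -Xmx${heap}m"   # prints: ES_JAVA_OPTS=-Xms8192m -Xmx8192m
```

Setting `-Xms` and `-Xmx` to the same value keeps the heap from resizing at runtime; the other half of the memory is left to the OS and its filesystem cache.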

Optimizing Elastic for Read and Write Performance

Avoid indexing large documents like books or web pages in a single document. Loading big documents into memory and sending them over the network is slower and more computationally expensive.

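Use time-based indices

Use time-based indices for managing data retention whenever possible. Old data can then be removed by deleting whole indices instead of deleting individual documents.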
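To illustrate how daily indices help with a retention window such as the 2 months mentioned in the Background, the sketch below picks out the indices that fall before a cutoff date. It assumes index names of the form `<prefix>-YYYY.MM.DD`; the `logstash-` prefix, the dates and the function name `expired_indices` are illustrative assumptions, not taken from the repo.

```shell
# Print the indices whose date suffix (<prefix>-YYYY.MM.DD) is older than the cutoff.
expired_indices() {
  # $1: cutoff date as YYYY.MM.DD; index names are read from stdin, one per line
  cutoff=$(echo "$1" | tr -d '.')
  while read -r index; do
    [ -n "$index" ] || continue
    # Keep only the part after the last "-" and drop the dots: 2021.01.15 -> 20210115
    day=$(echo "${index##*-}" | tr -d '.')
    if [ "$day" -lt "$cutoff" ]; then
      echo "$index"
    fi
  done
}

printf '%s\n' logstash-2021.01.15 logstash-2021.02.20 logstash-2021.03.05 |
  expired_indices 2021.03.01
# prints:
# logstash-2021.01.15
# logstash-2021.02.20
```

In this setup the listed indices would be snapshotted to S3 before being deleted. The same policy can also be expressed with Elasticsearch's index lifecycle management instead of a script.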

Monitor the heap usage on master nodes

The cluster state is loaded into the heap on every node, including the master nodes, and the amount of heap it needs is directly proportional to the number of indices, fields per index and shards. It is therefore important to monitor the heap usage on master nodes and make sure they are sized appropriately.

Avoid having very large shards

Very large shards can negatively affect the cluster’s ability to recover from failure. There is no fixed limit on how large shards can be, but a shard size of 50GB is often quoted as a limit that has been seen to work for a variety of use-cases.
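The 50GB guideline translates into a simple ceiling division when choosing the number of primary shards for an index. The following is a minimal sketch; the function name `primary_shards` and the example sizes are made up for illustration.

```shell
# Number of primary shards needed to keep each shard at or below a target size.
primary_shards() {
  # $1: expected index size in GB, $2: target shard size in GB (default 50)
  target=${2:-50}
  count=$(( ($1 + target - 1) / target ))   # integer ceiling of size / target
  if [ "$count" -lt 1 ]; then
    count=1
  fi
  echo "$count"
}

primary_shards 180      # 180 GB index, 50 GB target: prints 4
primary_shards 600 30   # 600 GB index, 30 GB target: prints 20
```

The expected index size has to come from your own measurements, for example the size of yesterday's daily index.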

Force smaller segments to merge into larger ones

As the overhead per shard depends on the segment count and size, forcing smaller segments to merge into larger ones through a forcemerge operation can reduce overhead and improve query performance. This should ideally be done once no more data is written to the index.
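A force merge is triggered through the `_forcemerge` endpoint of an index. The sketch below only builds the request and prints it unless `ES_URL` is set; `ES_URL`, `ELASTIC_PASSWORD`, the index name and the helper `forcemerge_url` are placeholders chosen for this example.

```shell
# Build the force-merge URL for an index that is no longer written to.
forcemerge_url() {
  # $1: Elasticsearch base URL, $2: index name, $3: target segment count (default 1)
  echo "$1/$2/_forcemerge?max_num_segments=${3:-1}"
}

# Placeholder index name; yesterday's daily index is a typical candidate.
url=$(forcemerge_url "${ES_URL:-https://localhost:9200}" "logstash-2021.01.15")

if [ -n "${ES_URL:-}" ]; then
  # Sends the request; authentication as the elastic user is assumed.
  curl -sS -u "elastic:${ELASTIC_PASSWORD:-}" -X POST "$url"
else
  echo "would run: curl -X POST $url"
fi
```

With the self-signed certificates generated earlier, curl additionally needs to be pointed at the CA certificate. Force merging is I/O heavy, so it is best scheduled outside peak hours.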

Do not use instance store for Elasticsearch

AWS recommends EBS volumes, because with instance store we may lose data for any of the reasons below:

1. The underlying disk drive fails

2. The instance stops

3. The instance terminates

Do not span a cluster across regions

Limit the number of fields

The index.mapping.total_fields.limit setting defines the maximum number of fields in an index. Field and object mappings, as well as field aliases, count towards this limit. The default value is 1000. Higher values can lead to performance degradation and memory issues, especially in clusters with a high load or few resources.

Give memory to the filesystem cache

The filesystem cache will be used in order to buffer I/O operations. You should make sure to give at least half the memory of the machine running Elasticsearch to the filesystem cache.

Conclusion:

Congratulations, your EFK stack has been successfully deployed!

Please note that this setup is suitable for a production environment only after you have benchmarked your workload, and you still need to add authentication for accessing Kibana. Kibana is also not accessible from outside the cluster yet, so you need to create an ingress rule that configures your Ingress controller to direct traffic to the Kibana pod.
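As a rough sketch of such an ingress rule, the snippet below writes a manifest to a file so it can be reviewed before it is applied. Everything specific in it is an assumption: the host `kibana.example.com`, the service name `kibana-kibana`, the `networking.k8s.io/v1` API version (Kubernetes 1.19 or newer) and a default ingress class being configured. Only port 5601, Kibana's default, is standard.

```shell
# Write an Ingress manifest for Kibana; host and service name are placeholders.
cat > kibana-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana
  namespace: development-tools
spec:
  rules:
    - host: kibana.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kibana-kibana
                port:
                  number: 5601
EOF

echo "review kibana-ingress.yaml, then run: kubectl apply -f kibana-ingress.yaml"
```

The actual service name can be looked up with `kubectl -n development-tools get svc` before applying the file.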
